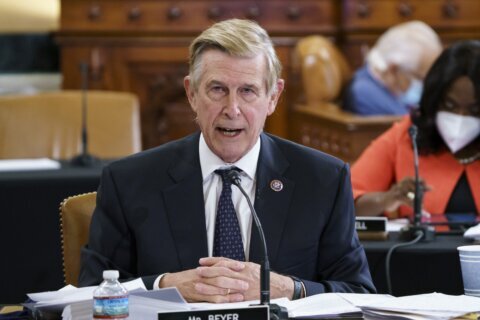

WASHINGTON (AP) — Don Beyer’s car dealerships were among the first in the U.S. to set up a website. As a representative, the Virginia Democrat leads a bipartisan group focused on promoting fusion energy. He reads books about geometry for fun.

So when questions about regulating artificial intelligence emerged, the 73-year-old Beyer took what for him seemed like an obvious step, enrolling at George Mason University to get a master’s degree in machine learning. Beyer’s journey is an outlier, but it highlights a broader effort by members of Congress to educate themselves about artificial intelligence as they consider laws that would shape its development.

Frightening to some, thrilling to others, baffling to many: Artificial intelligence has been called a transformative technology, a threat to democracy or even an existential risk. It will fall to members of Congress to figure out how to regulate it in a way that encourages its benefits while mitigating the worst risks.

But first they have to understand what AI is, and what it isn’t.

“I tend to be an AI optimist,” Beyer told The Associated Press following a recent afternoon class on George Mason’s campus in suburban Virginia. “We can’t even imagine how different our lives will be in five years, 10 years, 20 years, because of AI. … There won’t be coming after us any time soon. But there are other deeper existential risks that we need to pay attention to.”

Risks like massive job losses in industries made obsolete by AI. On the other side of the equation, onerous regulations could stymie innovation, leaving the U.S. at a disadvantage.

Striking the right balance will require input not only from tech companies but also from the industry’s critics, as well as from the industries that AI may transform. While many Americans may have formed their ideas about AI from science fiction movies like “The Matrix,” it’s important that lawmakers have a clear-eyed understanding of the technology, said Rep. Jay Obernolte, R-Calif., the chairman of the House’s AI Task Force.

When lawmakers have questions about AI, Obernolte is one of the people they seek out. He studied engineering and applied science at the California Institute of Technology and earned an M.S. in artificial intelligence at UCLA. The California Republican also started his own video game company. Obernolte said he’s been “very pleasantly impressed” with how seriously his colleagues on both sides of the aisle are taking their responsibility to understand AI.

That shouldn’t be surprising, Obernolte said. After all, lawmakers regularly vote on bills that touch on complicated legal, financial, health and scientific subjects. If you think computers are complicated, check out the rules governing Medicaid and Medicare.

Keeping up with the pace of technology has long challenged Congress, going back to the inventions that transformed the nation’s industrial and agricultural sectors. Nuclear power and weaponry is another example of a highly technical subject that lawmakers have had to contend with in recent decades, according to Kenneth Lowande, a University of Michigan political scientist who has studied expertise and how it relates to policy-making in Congress.

Federal lawmakers have created several offices to provide resources and specialized input when necessary. They also rely on staff with expertise on specific topics, including technology.

Then there’s another, more informal form of education that many members of Congress receive.

“They have interest groups and lobbyists banging down their door to give them briefings,” Lowande said.

Beyer said he’s had a lifelong interest in computers and that when AI emerged as a topic of public interest he wanted to know more. A lot more. Almost all of his fellow students are decades younger; most don’t seem that fazed when they discover their classmate is a congressman, Beyer said.

He said the classes, which he fits in around his busy congressional schedule, are already paying off. He’s learned about the development of AI and the challenges facing the field. He said it’s helped him understand both the challenges and the possibilities, like improved cancer diagnoses and more efficient supply chains.

Beyer is also learning how to write computer code.

“I’m finding that learning to code, which is thinking in this sort of mathematical, algorithmic step-by-step, is helping me think differently about a lot of other things: how you put together an office, how you work a piece of legislation,” Beyer said.

While a computer science degree isn’t required, it’s imperative that lawmakers understand AI’s implications for the economy, education, personal privacy and intellectual property rights, according to Chris Pierson, CEO of the cybersecurity firm BlackCloak.

“AI is not good or bad,” said Pierson, who formerly worked in Washington for the Department of Homeland Security. “It’s how you use it.”

The work of safeguarding AI has already begun, though it’s the executive branch leading the way so far. Last month, the White House unveiled rules that require federal agencies to show their use of AI isn’t harming the public. Under an executive order issued last year, AI developers must provide information on the safety of their products.

When it comes to more substantive action, America is playing catch-up to the European Union, which recently approved rules governing the development and use of AI. The rules prohibit some uses, routine AI-enabled facial recognition by law enforcement for one, while requiring other programs to submit information about safety and public risks. The landmark law is expected to serve as a blueprint for other nations as they contemplate their own AI laws.

As Congress begins that process, the focus must be on “mitigating potential harm,” said Obernolte, who said he’s optimistic that lawmakers from both parties can find common ground on ways to prevent the worst AI risks.

“Nothing substantive is going to get done that isn’t bipartisan,” he said.

To help guide the conversation, lawmakers created a new AI task force (Obernolte is co-chairman), as well as an AI Caucus made up of lawmakers with a particular expertise or interest in the topic. They’ve invited experts to brief lawmakers on the technology and its impacts, and not just computer scientists and tech gurus, but also representatives from different sectors that see their own risks and rewards in AI.

Rep. Anna Eshoo is the Democratic chairwoman of the caucus. She represents part of California’s Silicon Valley and recently introduced legislation that would require tech companies and social media platforms like Meta, Google or TikTok to identify and label AI-generated content to ensure the public isn’t misled. She said the caucus has already proved its worth as a “safe place” where lawmakers can ask questions, share resources and begin to craft consensus.

“There isn’t a bad or silly question,” she said. “You have to understand something before you can accept or reject it.”

Copyright © 2024 The Associated Press. All rights reserved. This material may not be published, broadcast, written or redistributed.